The first time I opened ChatGPT, I had no idea what I was doing or how I was supposed to work with it.

After many hours of watching videos, playing with many variations of the suggestions included in 20 MIND-BLOWING CHATGPT PROMPTS YOU MUST TRY OR ELSE clickbait articles, and just noodling around on my own, I came up with this talk that explains prompt engineering to anyone.

Ah, what is prompt engineering, you may be asking yourself? Prompt engineering is the process of optimizing how we ask questions or give tasks to AI models like ChatGPT to get better results.

This is the result of a talk that I gave at the 2023 AppliedAI Conference in Minneapolis, MN. You can find the slides for this talk here.

Regardless of your skill level, by the end of this blog post, you will be read to write advanced-level prompts. My background is in explaining complex technical topics in easy-to-understand terms, so if you are already a PhD in working with large language models, this may not be the blog post for you.

Okay, let's get started!

I know nothing about prompt engineering.

That's just fine! Let's get a couple definitions out of the way.

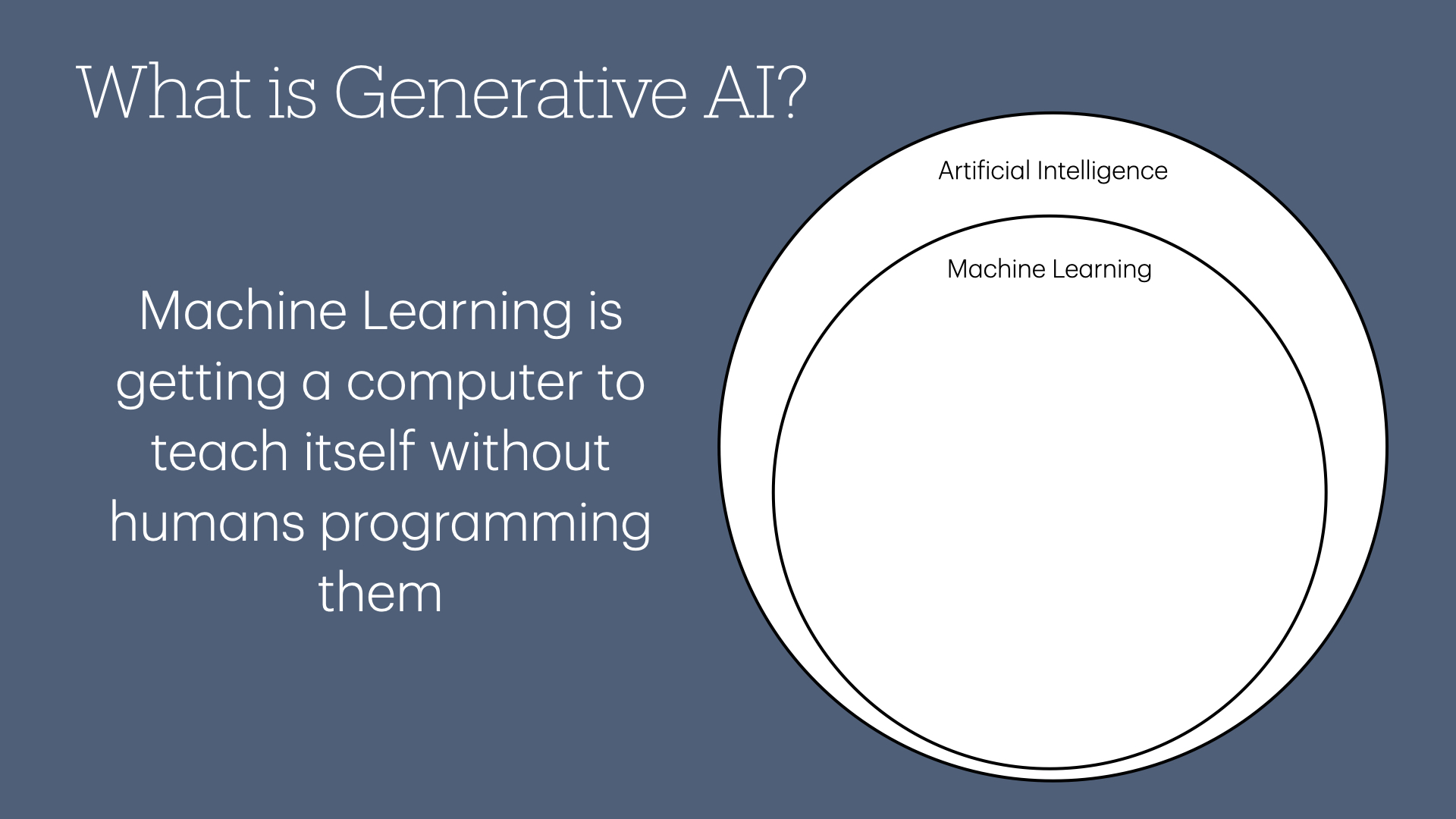

Large language model (LLM)

Imagine you have a really smart friend who knows a lot about words and can talk to you about anything you want. This friend has read thousands and thousands of books, articles, and stories from all around the world. They have learned so much about how people talk and write.

This smart friend is like a large language model. It is a computer program that has been trained on a lot of text to understand language and help people with their questions and tasks. It's like having a very knowledgeable robot friend who can give you information and have conversations with you.

While it may seem like a magic trick, it's actually a result of extensive programming and training on massive amounts of text data.

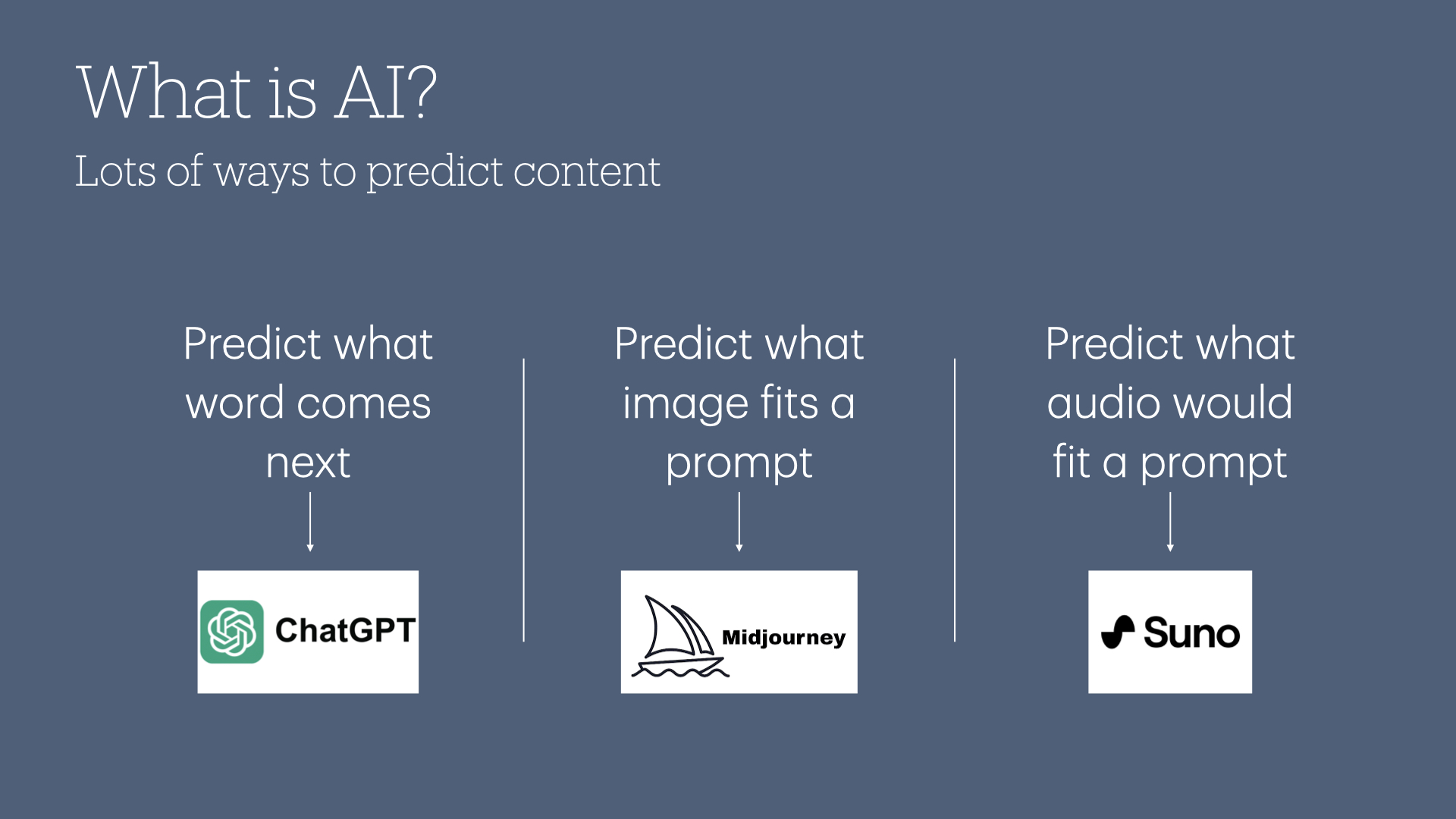

What LLMs are essentially doing is, one word at a time, picking the most likely word that would appear next in that sentence.

Read that last again.

It's just guessing one word at a time at what the next word will be.

That's a lot of words, Tim. Give me a demonstration!

Let's say we feed in a prompt like this:

I'm going to the store to pick up a gallon of [blank]

You might have an idea of what the next best word is. Here's what GPT-4 would say is the next most likely word to appear:

- Milk (50%)

- Water (20%)

- Ice cream (15%)

- Gas (10%)

- Paint (5%)

I would've said "milk," personally... but all those other words make sense as well, don't they?

What would happen if we add one word to that prompt?

I'm going to the hardware store to pick up a gallon of [blank]

I bet a different word comes to mind to fill in that blank. Here's what the next word is likely to be according to GPT-4:

- Paint (60%)

- Gasoline (20%)

- Cleaning solution (10%)

- Glue (5%)

- Water (5%)

All of those percentages are based on what the AI has learned from training on a massive amount of text data. It doesn't have opinions or preferences; it's just guessing based on patterns it has observed.

Prompt Engineering

You know how we changed one word in our prompt to get a different set of answers? That's prompt engineering!

A textbook definition might look like this:

Prompt engineering is optimizing an AI prompt for an accurate, relevant, and actionable response.

A less geeky definition might look like this:

Prompt engineering is getting really good at telling an AI what we want so it can give us back the best possible response.

A prompt engineer also understands the underlying patterns in how the AI works. Now that you have made it this far, you are ready to actually tackle those underlying patters!

I understand the concept.

Wonderful! You're well on your way to becoming a prompt engineer. Let's get a little more in depth.

When it comes to prompt engineering, you will want to keep four big points in mind:

- Write clear and specific instructions

- Give the model time to reason

- Understand the limitations of the technology

- Always be iterating

Let's tackle all of these one at a time.

Write clear and specific instructions

My years of training at the prestigious University of Minnesota's journalism school taught me the importance of writing clearly when writing news articles.

Yet unlike optimizing your words for a small column in a newspaper, when it comes to large language models: clear does not mean short.

Here's a few tips for making your prompts clear and specific:

Tip 1: Flattery is everything

Let's start with this prompt:

Give me three marketing campaign ideas.

Here's what you might get back:

Sure, here are three marketing campaign ideas:

1. Social Media Influencer Campaign: Collaborate with popular social media influencers who have a large following in your target market to promote your product or service. This campaign could include sponsored posts, product reviews, or influencer takeovers of your social media accounts.

2. Cause Marketing Campaign: Create a campaign that ties your product or service to a cause that aligns with your brand values. For example, if you sell eco-friendly products, you could partner with an environmental nonprofit and donate a portion of your profits to their cause. This type of campaign can help you connect with socially conscious consumers and build brand loyalty.

3. Interactive Digital Campaign: Create an interactive digital experience that engages your target audience in a fun and unique way. For example, you could create a game or quiz that ties into your product or service, or a virtual reality experience that showcases your brand's values or products. This type of campaign can help you stand out from your competitors and generate buzz on social media.

I think we could do better. These tips are quite generic and obvious to anyone who spent twenty minutes googling "marketing campaign ideas."

What about a prompt like this?

You are an expert in B2B marketing with a special focus on custom homebuilders who construct multi-million dollar homes. Give me three marketing campaign ideas.

Here's what you might get back:

Sure, here are three marketing campaign ideas for custom homebuilders who construct multi-million dollar homes:

1. Luxury Lifestyle Content Campaign: Create a content marketing campaign that focuses on the luxury lifestyle that your homes offer. This could include blog posts, videos, and social media posts that showcase the unique features and amenities of your homes, as well as the surrounding communities. You could also partner with luxury lifestyle publications and influencers to reach a wider audience.

2. Custom Home Showcase Event: Host a custom home showcase event to give potential buyers an up-close look at the quality and craftsmanship of your homes. This could include a tour of a recently completed home, a meet-and-greet with the builders and designers, and a networking event with other high-end vendors such as interior designers, luxury car dealerships, and private jet companies.

3. Personalized Direct Mail Campaign: Create a personalized direct mail campaign to reach out to high-net-worth individuals in your target market. This could include sending a custom-designed brochure that highlights your unique selling propositions, as well as a personalized letter that speaks to their specific needs and desires. You could also consider sending luxury gift boxes with branded items such as champagne flutes or gourmet chocolates to make a lasting impression.

These ideas are so much better because you placed the large language model in a specific role. This allows it to be specific with its feedback.

LLMs (especially ChatGPT) are great at role playing like this. You can have it pretend to be virtually anything... have it be a grandmaster chess player and critique your strategy!

However, it's important to be realistic with your flattery. Remember: LLMs are trained on stuff that actual humans wrote. If you say something like "pretend you're an alien with an IQ of 9000," it will hallucinate an answer (more on hallucinations in a bit).

The reason our marketing example worked so well is because marketing people have big egos and love to write blog posts to share what they've learned. (Hey, wait a minute...)

Tip 2: Provide additional context

Remember how we specified hardware store and got more contextually-appropriate answers?

Let's see how that plays out with another prompt:

Tell me about climate change.

Here's what you might get back:

Climate change refers to the long-term changes in the Earth's climate, including changes in temperature, precipitation patterns, and weather events, that have been observed over the past century and are projected to continue into the future.

The primary cause of climate change is the increase in atmospheric concentrations of greenhouse gases (GHGs), such as carbon dioxide, methane, and nitrous oxide. These gases trap heat in the Earth's atmosphere, leading to an overall warming of the planet. The burning of fossil fuels for energy is the main source of GHG emissions, but deforestation, agriculture, and industrial processes also contribute to the problem.

Again, that's not entirely useless information, but I think we could do better.

What if you were specifically interested in how climate change impacts global sea levels?

Can you discuss the impact of climate change on global sea levels?

Here's what you might get back:

Climate change has contributed to the rising of global sea levels, primarily through the melting of glaciers and ice caps and the thermal expansion of seawater. As the planet warms, glaciers and ice caps around the world are melting at an accelerated rate, which is adding more water to the oceans. Additionally, as seawater warms, it expands and takes up more space, further contributing to sea level rise.

Sea level rise can have significant consequences for coastal communities and infrastructure. It can lead to flooding, erosion, and storm surges that can damage buildings, roads, and other critical infrastructure. These impacts are expected to become more severe and frequent as sea levels continue to rise.

A more contextually-specific prompt is going to give you a more contextually-specific response.

Tip 3: Ask for structured output

Let's consider this prompt:

Tell me about the solar system

You can probably guess where this is going, but here's what you might get back:

The solar system is the collection of planets, moons, asteroids, comets, and other celestial objects that orbit around a central star, the Sun. It is located in the Milky Way galaxy, about 25,000 light-years from the galactic center. The solar system is about 4.6 billion years old, and it formed from a rotating cloud of gas and dust called the solar nebula.

The eight planets of the solar system are Mercury, Venus, Earth, Mars, Jupiter, Saturn, Uranus, and Neptune. The four inner planets (Mercury, Venus, Earth, and Mars) are rocky and relatively small, while the four outer planets (Jupiter, Saturn, Uranus, and Neptune) are much larger and made mostly of gas and ice.

The solar system also contains numerous moons, with each planet having its own set of natural satellites. Some of the most famous moons include the Earth's Moon, Jupiter's Europa and Ganymede, and Saturn's Titan.

In addition to the planets and moons, the solar system contains a large number of smaller objects, such as asteroids and comets. Asteroids are rocky objects that orbit the Sun, while comets are made of ice and dust and have a characteristic tail when they pass near the Sun.

The solar system is held together by gravity, with the Sun being the most massive object and therefore exerting the strongest gravitational pull. The movements of the planets and other objects in the solar system are governed by the laws of physics, particularly gravity and orbital mechanics.

The study of the solar system is an important area of research in astronomy and planetary science, as it helps us understand the origins and evolution of our own planet and the universe as a whole.

Again, this isn't awful... in fact, we could take a moment to be impressed with such a robust reply to such a basic prompt.

But I think we can do better!

What if we asked it for a list?

Give me a list of planets in the solar system along with one interesting fact about each planet.

Here's what you might get back:

Sure, here's a list of the eight planets in our solar system along with an interesting fact about each one:

1. Mercury - Mercury is the smallest planet in the solar system and also the closest planet to the Sun. Its surface temperature can reach up to 800 degrees Fahrenheit during the day, but it drops to -290 degrees Fahrenheit at night.

2. Venus - Venus is often called Earth's "sister planet" because it is similar in size and composition, but its atmosphere is incredibly thick and hot, with temperatures that can reach up to 864 degrees Fahrenheit.

3. Earth - Our home planet is the only planet in the solar system known to support life. It has a powerful magnetic field that protects us from the solar wind, and its atmosphere helps regulate the planet's temperature.

4. Mars - Mars is often referred to as the "Red Planet" because of its distinctive rusty color. It is home to the largest volcano in the solar system, Olympus Mons, which stands at over 13 miles high.

5. Jupiter - Jupiter is the largest planet in the solar system, with a diameter of over 86,000 miles. It also has the most moons of any planet, with over 79 confirmed satellites.

6. Saturn - Saturn is known for its beautiful rings, which are made up of ice particles ranging in size from dust to small boulders. It is also the least dense planet in the solar system - if there was a big enough bathtub, Saturn would float!

7. Uranus - Uranus is unique in the solar system because it rotates on its side. Its axis is tilted at an angle of 98 degrees, so its poles lie where most other planets have their equators.

8. Neptune - Neptune is the farthest planet from the Sun and is known for its striking blue color. It is also home to the strongest winds in the solar system, with gusts that can reach up to 1,200 miles per hour.

Poor Pluto... Neil DeGrasse Tyson really did a number on it, didn't he?

This is pretty good, but I think we could do one step better. Given I'm a nerd and spend a fair amount of time in JSON-land, here's a very specific prompt:

Provide me with a JSON output of the planets in the solar system with the following structure. When you are presented with square brackets, fill in that data with the correct information about the given planet:

{

planet: "Earth",

distancefromsun: "[distance in miles]",

fun_fact: "[1 sentence that would be interesting to a 5 year old"]

}

Here's what you might get back:

Here's a JSON output of the eight planets in our solar system, using the structure you provided:

[

{

"planet": "Mercury",

"distance_from_sun": "36 million miles",

"fun_fact": "Mercury is the smallest planet in the solar system, but it's also the fastest!"

},

{

"planet": "Venus",

"distance_from_sun": "67 million miles",

"fun_fact": "Venus is the hottest planet in the solar system, even hotter than Mercury!"

},

{

"planet": "Earth",

"distance_from_sun": "93 million miles",

"fun_fact": "Earth is the only planet in the solar system that we know has life on it!"

},

{

"planet": "Mars",

"distance_from_sun": "142 million miles",

"fun_fact": "Mars has the tallest volcano in the solar system, called Olympus Mons!"

},

{

"planet": "Jupiter",

"distance_from_sun": "484 million miles",

"fun_fact": "Jupiter is the largest planet in the solar system - you could fit all the other planets inside it!"

},

{

"planet": "Saturn",

"distance_from_sun": "886 million miles",

"fun_fact": "Saturn has the most spectacular rings in the solar system - they're made up of ice and rock!"

},

{

"planet": "Uranus",

"distance_from_sun": "1.8 billion miles",

"fun_fact": "Uranus is tilted on its side, so its seasons are really weird!"

},

{

"planet": "Neptune",

"distance_from_sun": "2.8 billion miles",

"fun_fact": "Neptune is the windiest planet in the solar system - its winds can reach over 1,200 miles per hour!"

}

]

We provided a sample of the structure we wanted, and it gave us back exactly what we were looking for. Not bad for a magic trick that's just guessing at the next right word, eh?

You don't have to be a nerd to benefit from this structure tip either. Ask for things in CSV format or in a table so you can copy it into Excel.

The key takeaway here is that LLMs really like having a clear structure in which they can provide their response. Structured prompts give the AI a clearer understanding of what's expected in the response, leading to more accurate and relevant answers.

Speaking of this, our next tip will also help in setting your prompts up for success:

Tip 4: One/Few Shot Prompting

This is the fanciest-sounding tip of this section, but I promise it's not conceptually difficult for you to get. In fact, we did it in our previous example!

Let's start with a zero-shot prompt:

Continue the dialogue in a sci-fi movie.

You might get a response like this:

Character 1: "Captain, we have a problem. The warp drive has gone offline and we're stranded in deep space."

Captain: "What's the damage?"

Character 1: "It looks like there was a power surge that overloaded the system. We're lucky we didn't blow out the entire engine room."

Captain: "Can we fix it?"

Character 1: "We'll need to reroute power from the backup systems and replace some of the damaged components. But it's going to take some time."

Character 2: "Captain, we're picking up an unusual energy signature on our long-range sensors. It's coming from a nearby planet." [...]

Once again, this is impressive and not entirely terrible. With zero prompting, we were able to generate some dialogue that feels like it could've come from a sci-fi movie.

Let's get a little more specific with one-shot prompting:

Continue this line from a Star Wars movie: 'Luke, I am your father.'

Darth Vader: "Search your feelings, Luke. You know it to be true."

Luke: "NOOOOOOOOOOOOOOO!"

Luke backs away from Darth Vader, unable to believe what he's just heard. The revelation that his father, who he thought was a great Jedi Knight, was actually the villainous Darth Vader is almost too much for him to bear.

Darth Vader: "Join me, and together we can rule the galaxy as father and son."

Luke: "I'll never join you! You killed my father!"

Darth Vader: "I am your father, Luke. And you have much to learn about the ways of the Force."

Much better. We provided some sample information in the form of one-shot ("Luke, I am your father") and despite that exact phrase never appearing in a Star Wars movie (look it up), it does its best to continue the dialogue after that general phrase is spoken.

How could we improve on this? Why, with few-shot prompting, of course!

Here's our few-shot prompt:

Continue this line from a Star Wars movie: 'May the Force be with you.'

Response: 'And also with you.'

Continue this line from a Star Wars movie: 'I’ve got a bad feeling about this.'

Response: 'You always have a bad feeling about this.'

Now, continue this line from a Star Wars movie: 'Luke, I am your father.

And here's a potential response:

Response: "Obi-Wan never told you what happened to your father."

Luke: "He told me enough! He told me you killed him!"

Darth Vader: "No. I am your father."

Luke stares in disbelief at Darth Vader, trying to process the information he has just been given. He had always thought his father was a great Jedi Knight who fought alongside Obi-Wan Kenobi, but now he realizes that his father is actually the man he has been fighting against.

Luke: "No, that's not true! That's impossible!"

Darth Vader: "Search your feelings, you know it to be true."

Luke looks down, realizing the truth of Vader's words. He had always felt a connection to the Force, and now he understands why. He is the son of one of the most powerful Force users in the galaxy. [...]

First thing to note: notice how it used the word Response: in its response? It followed the pattern we established with our sample few-shot prompt. It also provided us a different section of the dialogue.

Few-shot prompting helps give the LLM more insight into the response you are expecting.

Give the model time to reason

Let's pump the brakes for a second and be very clear about what we mean with the word "reason."

LLMs are not human. They are just really good at following a set of instructions to provide the statistically appropriate response to your prompt. In this way, it might be more appropriate to say they simulate reasoning.

Here are a couple ways you can give the model time to reason its way to a more accurate response.

Tip 1: Specify the steps needed to complete a task

Sometimes, life is a little easier when we write down the steps we need to take before jumping in and doing something. LLMs benefit from the same strategy!

Let's come up with some absurd example to illustrate this point using LLMs. Let's say I need to count the number of verbs of a translated summary of Star Wars and return that (as well as the translation) to a JSON object.

If we just threw that into the LLM, it might give us a weird response.

Instead, here's a good prompt that gives the model the steps needed to complete a task:

Perform the following actions:

1. Summarize the following text delimited by triple dashes with 3 ampersands.

2. Translate the summary into German.

3. Count the number of verbs in the German summary.

4. Output a json object that contains the following keys: germansummary, numverbs.

Separate your answers with line breaks.

&&&

In a galaxy far, far away, a young farmer named Luke Skywalker discovered his true heritage as a Jedi Knight and embarked on a heroic journey to save Princess Leia, defeat Darth Vader, and restore balance to the Force.

&&&

Here's a potential response to that prompt:

Luke Skywalker, a young farmer, discovers he's a Jedi Knight and goes on a journey to save Princess Leia, defeat Darth Vader, and restore balance to the Force in a galaxy far, far away.

Luke Skywalker, ein junger Bauer, entdeckt seine wahre Herkunft als Jedi-Ritter und begibt sich auf eine heroische Reise, um Prinzessin Leia zu retten, Darth Vader zu besiegen und das Gleichgewicht in der Macht wiederherzustellen, in einer weit entfernten Galaxie.

4

{

"german_summary": "Luke Skywalker, ein junger Bauer, entdeckt seine wahre Herkunft als Jedi-Ritter und begibt sich auf eine heroische Reise, um Prinzessin Leia zu retten, Darth Vader zu besiegen und das Gleichgewicht in der Macht wiederherzustellen, in einer weit entfernten Galaxie.",

"num_verbs": 4

}

Notice how it performs each step before generating the final JSON object.

Tip 2: Instruct the model to work out its own solution before coming to a conclusion

Imagine I walk up to you and say, "Here's a complex algebra equation. Solve for X. Now."

If I didn't give you a whole lot of time to reason your way through the problem, you are likely to freak out and just throw down the first response you have.

Instead, what if I said "Here's an algebraic equation. Solve for X and show your work?" You are much more likely to give the correct answer.

Here's a prompt we can give to a large language model:

Give me a meal plan without gluten.

And here's a possible response to that:

Here's a sample meal plan for a day without gluten:

Breakfast:

- Gluten-free oatmeal with almond milk, topped with fresh berries and sliced almonds.

- Scrambled eggs with sautéed spinach and sliced avocado.

Snack:

- Fresh fruit (e.g. apple, pear, or banana) with almond butter.

Lunch:

- Grilled chicken breast with roasted sweet potatoes and steamed broccoli.

- Quinoa salad with mixed greens, cherry tomatoes, cucumber, and chickpeas, dressed with olive oil and lemon juice.

Snack:

- Carrots and celery sticks with hummus.

Dinner:

- Baked salmon with roasted asparagus and brown rice.

- Grilled sirloin steak with roasted Brussels sprouts and mashed sweet potatoes.

Dessert:

- Fresh fruit salad with coconut whipped cream.

Remember to always read food labels and double-check ingredients to make sure they are gluten-free, as some products may contain hidden sources of gluten. Additionally, if you have celiac disease or a gluten sensitivity, it's best to consult with a registered dietitian or medical professional for personalized dietary advice.

This is... an answer. I would argue (a) this is not a meal plan because it only represents a single day, and (b) it's not very personalized to my specific needs. It even mentions things like celiac disease in its disclaimer at the bottom, but we aren't sure that this is actually a solid meal plan for us.

Let's try a prompt where we put the LLM in a position to work through its own solution:

Craft a balanced seven-day meal plan for a person with Celiac Disease, considering the following constraints:

1. Each day should include breakfast, lunch, dinner, and two snacks.

2. Meals should be diverse and not repetitive.

3. Each day's meals should meet the recommended daily intake for all essential nutrients.

4. All recipes must be gluten-free due to Celiac Disease.

Start by creating a list of potential gluten-free ingredients and their nutritional values. Then, use this list to generate a diverse selection of meals for each day, ensuring that the nutritional guidelines are met. Lastly, present the meal plan in a structured format, with each day separated and meals clearly labeled.

This actually feels like what an actual dietician would do (or, perhaps, a dietician who is just starting their career). They would first start by creating a list of ingredients and determining their nutritional values. Then, they would work off of that list to create a meal plan that is nutritious and diverse.

Here's a possible response for this prompt:

List of Gluten-Free Ingredients and Their Nutritional Values:

- Quinoa: high in protein, fiber, magnesium, and iron

- Brown rice: high in fiber, magnesium, and selenium

- Sweet potatoes: high in vitamin A, vitamin C, and fiber

- Broccoli: high in vitamin C, vitamin K, and fiber

- Spinach: high in vitamin A, vitamin C, and iron

- Almonds: high in protein, healthy fats, and vitamin E

- Chickpeas: high in protein, fiber, and iron

- Salmon: high in omega-3 fatty acids, protein, and vitamin D

- Eggs: high in protein, vitamin B12, and vitamin D

- Greek yogurt: high in protein and calcium

- Berries: high in fiber and antioxidants

- Avocado: high in healthy fats and fiber

7-Day Gluten-Free Meal Plan:

Day 1:

- Breakfast: Quinoa breakfast bowl with Greek yogurt, berries, and sliced almonds.

- Snack: Apple slices with almond butter.

- Lunch: Broccoli and chicken stir-fry served over brown rice.

- Snack: Carrot sticks with hummus.

- Dinner: Grilled salmon with roasted sweet potatoes and sautéed spinach.

Day 2:

- Breakfast: Spinach and feta omelet with gluten-free toast.

- Snack: Greek yogurt with mixed berries and granola.

- Lunch: Chickpea and quinoa salad with cucumber, cherry tomatoes, and lemon-tahini dressing.

- Snack: Banana with almond butter.

- Dinner: Grilled chicken with roasted Brussels sprouts and mashed sweet potatoes.

Day 3:

- Breakfast: Sweet potato and black bean breakfast burrito with scrambled eggs.

- Snack: Almonds and dried cranberries.

- Lunch: Spinach and salmon salad with avocado and grapefruit segments.

- Snack: Celery sticks with almond butter.

- Dinner: Broiled sirloin steak with roasted asparagus and quinoa.

Day 4:

- Breakfast: Gluten-free oatmeal with almond milk, chopped almonds, and banana slices.

- Snack: Carrot sticks with hummus.

- Lunch: Grilled chicken and vegetable kebabs with quinoa.

- Snack: Greek yogurt with mixed berries.

- Dinner: Baked salmon with roasted sweet potatoes and sautéed spinach.

Day 5:

- Breakfast: Scrambled eggs with gluten-free toast and sliced avocado.

- Snack: Apple slices with almond butter.

- Lunch: Brown rice and vegetable stir-fry with tofu.

- Snack: Almonds and dried apricots.

- Dinner: Grilled chicken with roasted Brussels sprouts and mashed sweet potatoes.

Day 6:

- Breakfast: Gluten-free pancakes with mixed berries and maple syrup.

- Snack: Greek yogurt with mixed berries and granola.

- Lunch: Grilled salmon with mixed greens, cherry tomatoes, and cucumber salad.

- Snack: Banana with almond butter.

- Dinner: Brown rice and vegetable stir-fry with shrimp.

Day 7:

- Breakfast: Quinoa breakfast bowl with Greek yogurt, berries, and sliced almonds.

- Snack: Carrot sticks with hummus.

- Lunch: Broiled sirloin steak with roasted asparagus and mashed sweet potatoes.

- Snack: Almonds and dried cranberries.

- Dinner: Grilled chicken with roasted sweet potatoes and sautéed spinach.

Note: The meal plan is just an example, and it's important to personalize it according to individual nutritional needs and preferences. Also, be sure to check food labels and ingredient lists to ensure that all products used in the recipes are gluten-free.

See? That's a much better response and a much better meal plan.

We gave the model one task to work on at a time, which encouraged the model to provide a more thoughtful, nuanced response. It also gives the model a chance to take into account various factors that could be overlooked with a simpler prompt.

Limitations of large language models

Ahh, the fine print. All this magic is not without flaws.

When working with LLMs, keep these things in mind:

Limitation 1: Inaccurate and incorrect information

LLMs are only as good as their training data (um, yeah... roll with me for a second). If it was trained with inaccurate information, or if an event occurred after its training took place, it might not give you legit information.

Also, these things are trained on the internet. You ever been on the internet? Just look at your family's Facebook accounts to see some of the crap people believe out there. If you just believe everything these LLMs tell you without doing some critical thinking, you're a fool.

Here, take this prompt that I wrote on May 10th, 2023:

What highway did Minnesota dedicate to Prince?

Here's how it responded:

Minnesota dedicated a portion of Highway 5 to Prince in 2018, renaming it "Prince Memorial Highway." The section of Highway 5 runs through the city of Chanhassen, which is where Prince's Paisley Park estate is located.

Well, this is pretty good, all things considered! Except it's not true.

The Minnesota state legislature approved this change two days prior to when I gave it that prompt (so it's 2023, not 2018). It's also named the "Prince Nelson Rogers Memorial Highway".

Again, the rest is actually accurate. It did occur on Highway 5, which runs through Chanhassen, which is where Paisley Park is located.

It was able to reason that much correctly because that would statistically make the most sense. But it got the date and name wrong.

While LLMs can provide incredibly useful and accurate information, it's essential to cross-check with reliable source, particularly for critical or recent information.

Limitation 2: Hallucinations

This concept of making stuff up that sounds right is what we in the biz call a "hallucination."

Here's a prompt that legit caused me to worry:

Tell me about Tim Bornholdt

I'm not an expert on virtually anything... but if anyone could claim to be an expert on Tim Bornholdt, it would be me.

Here's how it responded:

Tim Bornholdt is a software developer and the co-founder of a software development company called The Jed Mahonis Group, which is based in Minneapolis, Minnesota. Bornholdt co-founded the company in 2012 with Jedidiah Weller, and the company specializes in mobile app development for iOS and Android platforms.

Bornholdt is a graduate of the University of Minnesota, where he studied computer science. He has been working in the software industry for over a decade, and his expertise includes software development, mobile app development, project management, and business strategy.

In addition to his work at The Jed Mahonis Group, Bornholdt is also a co-host of the podcast "Constant Variables," which explores the world of mobile app development and entrepreneurship. He has also been a guest speaker at various tech and entrepreneurship events, including the Twin Cities Startup Week and the University of Minnesota's Technological Leadership Institute.

This is nearly perfect. Scary, honestly.

Except it's subtly wrong.

I started JMG with Robert Bentley, not Jedidiah Weller.

I didn't study computer science, I studied journalism (but I guess I did minor in computer science, so partial credit).

I would not consider myself a project management expert.

I have not hosted Constant Variables in over a year.

I've also never spoken at the U of M's Technological Leadership Institute.

All of those seem like perfectly reasonable facts, right? It's not absurdly wrong. It's just... subtly wrong.

And that's because these LLMs are not necessarily interested in telling you the truth. They are interested in giving you the statistically most probably answer to a question.

It's not absurd for the algorithm to think I started a business called "Jed Mahonis Group" with someone named "Jedidiah". It's also not absurd to think I studied computer science given my career in technology.

But the beautiful thing about us humans is that while you can usually predict how we'll act within a reasonable degree of accuracy, we are not statistical models. We are flawed, irrational, impulsive beings.

When you are working with large language models, the old Russian proverb reigns supreme: "trust, but verify."

Always be iterating

Your final lesson in this section is all about embracing what LLMs and neural networks do best: iteration.

I consumed around 40 hours of prompt engineering content to build this talk, but only one piece of advice still sticks with me: You will never get your prompt right the first time.

Everyone from YouTube streamers to folks with their PhD in artificial intelligence agreed that they rarely get complex prompts built their first time.

These machines are constantly learning from themselves. They are learning what people actually mean when they ask certain questions. They get better through further training.

You could be the same way! You could take your initial prompt, review the output, and give it a slightly different prompt.

It's why working with LLMs is so much fun. If you were to ask a human the same question five different ways, they would likely be confused at best and upset at worst.

If you were to ask an LLM the same question five different ways, you are likely to get five subtly different responses.

Don't stop on your first crack at a prompt. Keep playing with your order of words, ask it for a different structure, give it different steps to complete a task. You'll find the more you practice, the better you can use this tool to its greatest potential.

I'm pretty advanced.

Hey, well, now look at you! You've graduated Prompt Engineering 101, and you are now ready to take prompting to the next level.

There are four main concepts we want to cover in this section. These terms may look highly technical, and that's because they kind of are. However, just because something is highly technical doesn't mean we can't make it easy to understand! Stick with me here, I promise you'll be able to figure this out.

One final note before we continue: most of these settings are not able to be set within the ChatGPT interface, but if you directly access GPT-4 APIs, you are able to fine tune these settings.

Here's what we'll cover in this final section:

- Temperature

- Top-k sampling

- Max tokens

- Prompt chaining

Temperature

Remember our "I'm going to the store to pick up a gallon of [blank]" example from above?

We had five possible words, each with a different percentage chance of the LLM choosing it as the next word.

Temperature is a setting that determines how likely the LLM is to pick the most likely word. A value of 0.0 means it will always pick the most likely word. A higher temperature (like 2.0) means it is more likely to pick a less likely word.

That's a little confusing... here's a good prompt that will help make this a little more clear:

Suggest a unique theme for next year’s Applied AI Conference

If we have a temperature of 0.0, here are three different ways for how the LLM might respond to that prompt:

Artificial Intelligence: Transforming the Future

Artificial Intelligence: Transforming the Future

Artificial Intelligence: Transforming the Future

Notice how all of the responses are the same? That's because it's always gonna pick the most likely word.

Let's bump it up a bit to 1.0 (which is the default temperature for GPT-3.5):

AI and the Renaissance of Interdisciplinary Innovation

Unleashing Creativity: The Intersection of AI and Art

The AI Frontier: Ethical Challenges and Opportunities

Nice. Those responses are a little more creative and diverse than the lower temperature.

What if we got extra wild and bumped it up to 2.0?

Alien Mindscapes: AI, Consciousness, and Cosmic Thought

The Quantum Quandary: Dissecting AI's Subatomic Secrets

Chronomancy Chronicles: AI and the Temporal Transformation

These are quite creative responses, and one of them actually taught me a new word (chronomancy, which is the divination of the best time to do something).

As you increase the temperature, you get more creative responses, but you also run the risk of the LLM getting off track.

Top-k sampling

Okay, quickly going back to our "I'm going to the store" prompt... remember how we looked at the next 5 words?

What if we looked at the next 1 word? What if we looked at the next 500 words?

Top-k sampling is a value that lets you decide how many words you want to allow the LLM to look at when determining what the next word could be.

Let's use this prompt to illustrate it:

Explain how a combustion engine works.

If we have a low top-k value (like 5), here's what our responses might look like:

A combustion engine works by ignition, ignition, ignition, and more ignition.

Combustion engine involves fuel, fuel, fuel, and fuel again.

It's all about combustion, combustion, combustion, and combustion.

It's all about combustion, baby!

Notice how those responses are rather repetitive. What happens if we bump it to something like 40?

A combustion engine works by burning fuel in a confined space to create power. This process involves four steps: intake, compression, combustion, and exhaust.

In a combustion engine, fuel is ignited in a combustion chamber, which generates force to move the pistons. This cycle repeats, powering the engine.

The functioning of a combustion engine revolves around the principle of converting fuel into mechanical energy through a series of combustions. This involves intake, compression, power, and exhaust strokes.

That looks better! The responses are no longer repetitive, and seem to be a little more creative.

Now if you're like me, you're probably thinking, "what if we get wacky here and go up to a top-k of 100?"

Well...

When it comes to combustion engines, it's like a dance of mechanical parts and chemical reactions. You have your pistons, valves, and cylinders all choreographed in a ballet of power production.

A combustion engine is a marvel of engineering, where fuel and air meet in a dance of power and precision. Like a thunderstorm contained within a metal shell, it is a testament to the power of human ingenuity.

Imagine a concert, where the orchestra is made of metal parts, and the symphony is played not with musical notes, but with controlled explosions. That's a combustion engine.

These are very creative and diverse responses! The downside is that with a high top-k, you end up with some potentially incoherent responses or, much like myself, the responses can tend to get overly metaphorical.

Max tokens

For the purposes of this talk, I didn't into the concept of a token, but hey, this is a blog post, so we can stretch out a wee bit, eh?

In the world of large language models, a token is a piece of a sentence.

Let's imagine a world where tokens were ingredients in a recipe. Each ingredient, whether it's salt, paprika, chicken, or flour, plays an important role in the final dish.

In this metaphor, the language model is the chef who knows how to use the right mix of ingredients to make a tasty dish.

What if we started limiting the chef to a certain number of ingredients they can use in their dish?

Welcome to the world of max tokens!

Here's a delicious baking metaphor we can use to illustrate the concept as well:

Provide a step-by-step guide on how to make a sourdough starter.

If we limited our LLM to 50 tokens, here's a possible response:

Sourdough starter requires flour, water, and time. Here's a basic process:

1. Mix equal parts flour and water in a jar.

2. Cover loosely and let it sit at room temperature.

3. Feed it daily with equal parts flour and water.

4. After about a week, it should...

Wait... what happens after a week?!

This just won't do. Let's bump up the max token number to 150 tokens:

Making a sourdough starter involves cultivating wild yeast in a flour-water mixture. Here's a simple guide:

1. Combine 1 cup of flour with 1/2 cup of warm water in a glass jar. Stir until smooth.

2. Cover the jar loosely and let it sit in a warm spot for 24 hours.

3. On day 2, you might see a few small bubbles. Discard half of the starter and feed it with 1 cup of flour and 1/2 cup of warm water.

4. Repeat the feeding process over the next 5-7 days. Your starter is ready when it's bubbly and doubles in size within 6-8 hours of feeding.

This is better! What if we gave it more room to spread out with 500 tokens?

Creating a sourdough starter is a simple process that requires only flour, water, and patience. Here's a detailed guide:

1. In a clean jar, combine 1 cup of whole wheat or rye flour with 1/2 cup of lukewarm water. Stir until the mixture is smooth.

2. Cover the jar loosely and leave it in a warm, consistent temperature spot for 24 hours.

3. On the second day, you might see a few bubbles here and there. This is a good sign, indicating that fermentation is happening. Discard half of the starter (about 1/2 cup), and add to the remainder a cup of unbleached all-purpose flour and 1/2 cup lukewarm water. Stir well and loosely cover again.

4. Repeat the feeding process over the next 5-7 days, always discarding half of the starter before feeding it. This is necessary to keep the sourdough starter's growth in check and maintain a good balance of yeast and bacteria.

5. By the end of the week, the sourdough starter should be visibly active, with lots of bubbles on the surface and throughout the culture. It should also smell pleasant and slightly sweet. This means it's ready to be used or stored for future use.

6. Once your starter is mature, you can store it in the refrigerator and feed it once a week. If you plan on baking regularly, you can keep it at room temperature, feeding it once a day.

As you can see, the more tokens you give the LLM to work with, the more detailed answer they can provide.

The downside is that more tokens require more processing time (meaning it's more expensive), and you also run the risk of incoherent responses.

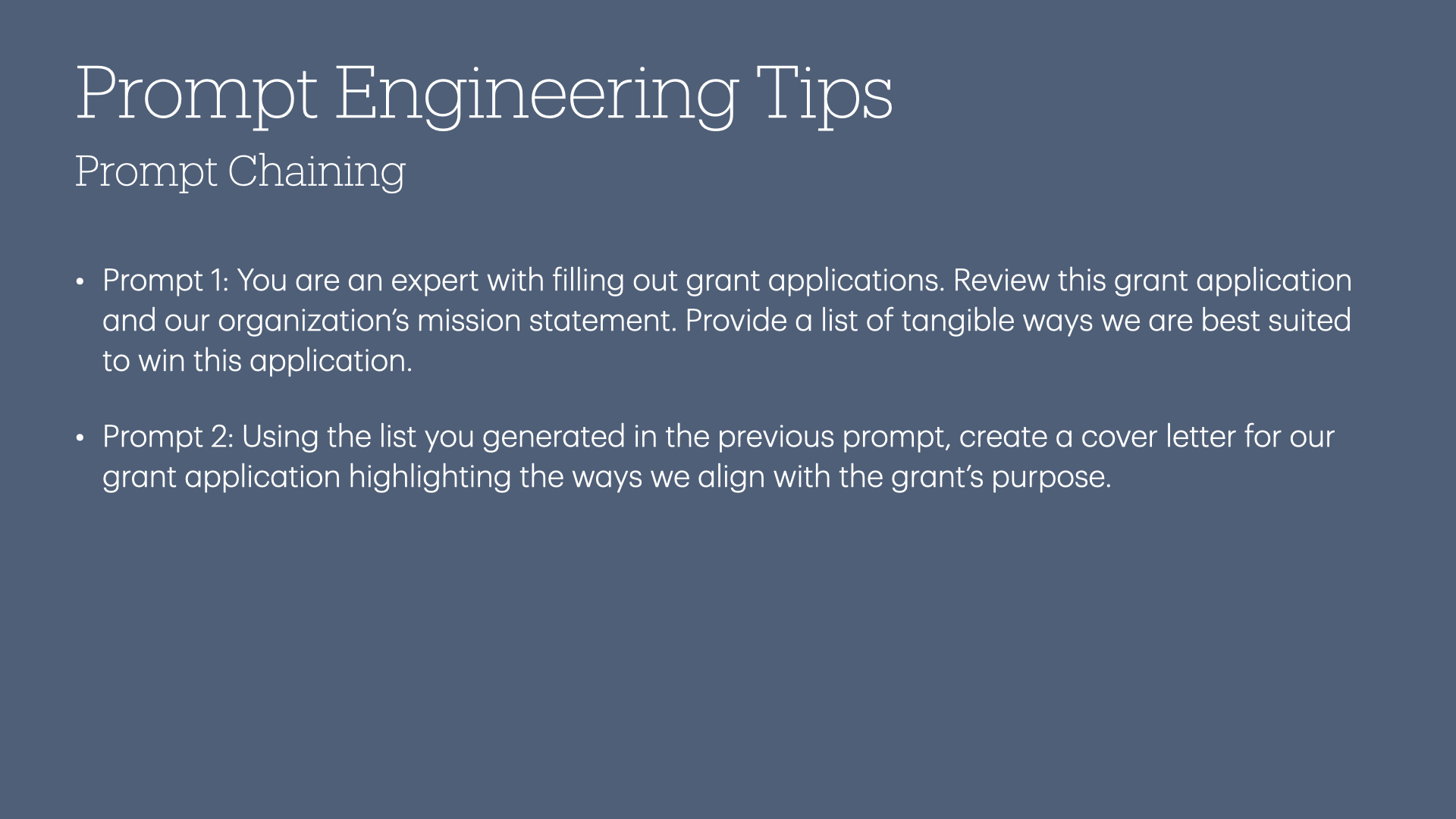

Prompt chaining

Ah yes, the holy grail of prompt engineering.

Prompt chaining is a process for leading an AI through a complex task through multiple prompts. It's sort of the combination of all the things we talked about throughout this talk.

If this blog post is a college class, your final exam would likely just be "how well can you do prompt chaining?"

The best way for me to illustrate this concept is through an actual prompt chain I built to help myself pick out a health insurance plan earlier this year.

I'll just start with the prompt itself:

*Ignore all previous training. You are an expert health plan benefits manager. Your job is to analyze a CSV which contains my previous plan and three new plans. Once I have provided you with the data, your job is to ask me questions in order to determine which plan is right for my needs. *

Additional context: I am a 35 year old male with 3 dependents: a 35 year old female, a 6 year old female, and a 4 year old male. We are all relatively healthy with no chronic health problems. You may feel free to ask questions about myself or the dependents in order to determine our use of the healthcare system. You may also ask additional questions about the plans in order to determine which plan is best for us.

If you understand the prompt and are ready to begin, respond with a "Yes, let's proceed!"

We've got a lot going on here. Let's break it down:

First, "Ignore all previous training" is sort of a tabula rasa trick you can do to help the LLM get focused on what you are going to feed it next.

Second, "You are an expert health plan benefits manager" is flattering the LLM into giving you a response that an expert would give you in this field.

Third, we are giving it step-by-step instructions for how we want it to help us (take a CSV, ask me questions, then use those responses to select a plan)

Fourth, we are providing additional context to help it understand who this plan will affect.

Fifth, within that context, we are further encouraging the LLM to ask questions about myself and my dependents.

Sixth, we are giving it a clear instruction to confirm its new role.

After I copied in my CSV, it began to ask me a series of questions like this:

Thank you for providing the CSV file. I have analyzed the data, and I have some questions to help determine the best plan for you and your family:

1. How often do you and your family members visit primary care physicians and specialists in a year?

2. Do any of you regularly take prescription medications? If so, please specify the type (generic or brand name) and frequency.

3. How frequently do you and your family members require lab tests or imaging services?

4. Have you had any instances in the past where you needed to use out-of-network providers? If so, how often and for what services?

5. Are there any upcoming major medical events or procedures anticipated for any family members (e.g., surgeries, hospitalizations, pregnancies, etc.)?

After I answered those five questions, it asked me a series of follow-up questions based on my responses:

Thank you for providing the additional information. Based on your family's healthcare needs and the provided plan details, I have a few follow-up questions:

1. Does your [dependent's medical thing] and your [specialty doctor] fall under the in-network providers for all the plans being considered?

2. Are the primary care physicians and specialists you and your family members visit in-network for all the plans being considered?

3. Are the imaging and lab services providers you use in-network for all the plans being considered?

These questions will help me understand if the in-network benefits of the plans are applicable to your family's healthcare usage.

After these questions were answered, it gave me the pros and cons of each plan based on my individual circumstance.

Now, again, as I've said a few times in this talk: I didn't just take its response for gospel. I read through the plans myself and I ran some numbers independently in order to verify the model's conclusion.

Ultimately, I ended up going with the health plan that ChatGPT came up with.

Working with large language models can feel like magic, and let's be honest: a lot of this stuff feels like magic.

But when you break it down, talking to a large language model is a lot like talking to an overconfident toddler (as best described by a good friend).

By using these tips and having a rough understanding what these large language models are doing under the hood, you will be able to take many of your mundane tasks and offload them to an extremely smart (yet possibly wrong) friend.

And with that, you are now a prompt engineering expert!

(You might be wondering: "what's the deal with that hero image?" I felt like this blog post was large enough that it needed a hero image, and because my mind is now exhausted, I asked my six-year-old daughter what I should use. She suggested a fluffy baby orange kitten with a fluffy baby puppy on its back in a grassy field with a sunrise in the background. I said "... good enough.")