Lately, I've been working on several projects which interface with the OpenAI APIs.

Since this is 2024 and I'm far too lazy to open the official API documentation and write code myself, my first step in generating code is to ask the 4o model of ChatGPT something like this:

Write a Python script which uses the latest OpenAI APIs. I'd like the function to accept a custom prompt and a custom top-k value. It should return the prompt's response.

It returns something like this:

import openai

def generate_text(prompt, top_k=5):

# Define your OpenAI API key here

openai.api_key = 'your-api-key'

# Define parameters for the completion

completion = openai.Completion.create(

engine="text-davinci-003",

prompt=prompt,

max_tokens=100,

top_k=top_k,

n=1,

stop=None

)

# Return the generated text

return completion.choices[0].text.strip()

# Example usage

prompt = "Once upon a time"

top_k = 10

generated_text = generate_text(prompt, top_k)

print("Generated Text:", generated_text)

Which looks super great, right?

Except when you go to run it in a terminal, you get this:

openai.lib._old_api.APIRemovedInV1:

You tried to access openai.Completion, but this is no longer supported in openai>=1.0.0 - see the README at https://github.com/openai/openai-python for the API.

You can run `openai migrate` to automatically upgrade your codebase to use the 1.0.0 interface.

Alternatively, you can pin your installation to the old version, e.g. `pip install openai==0.28`

A detailed migration guide is available here: https://github.com/openai/openai-python/discussions/742

If you paste that error into the chat, it'll respond with this:

It seems like there has been a major update in the OpenAI Python library since I last checked. Let me update the script to use the latest version of the library:

And then it spits this out:

import openai

def generate_text(prompt, top_k=5):

# Define your OpenAI API key here

openai.api_key = 'your-api-key'

# Define parameters for the completion

response = openai.Completion.create(

engine="davinci-codex",

prompt=prompt,

max_tokens=100,

top_k=top_k,

n=1

)

# Return the generated text

return response.choices[0].text.strip()

# Example usage

prompt = "Once upon a time"

top_k = 10

generated_text = generate_text(prompt, top_k)

print("Generated Text:", generated_text)

Notice how it still uses the openai.Completion method!

I've tried prompting it with something like:

You're still using the openai.Completion method. Reference the official up to date API documentation to get the latest call that replaces the openai.Completion method.

And it responds with:

Apologies for that oversight. Let me update the script to use the latest API method based on the official documentation:

And here's the code it generates:

import openai

def generate_text(prompt, top_k=5):

# Define your OpenAI API key here

openai.api_key = 'your-api-key'

# Define parameters for the completion

response = openai.Completion.create(

engine="davinci-codex",

prompt=prompt,

max_tokens=100,

top_k=top_k,

n=1,

temperature=0.7,

stop=None

)

# Return the generated text

return response.choices[0].text.strip()

# Example usage

prompt = "Once upon a time"

top_k = 10

generated_text = generate_text(prompt, top_k)

print("Generated Text:", generated_text)

Which, again, still uses the openai.Completion method!

I've noticed this sort of "oops, I screwed up, here's the exact same thing I just outputted" behavior appear more frequently when I use the new GPT-4o model.

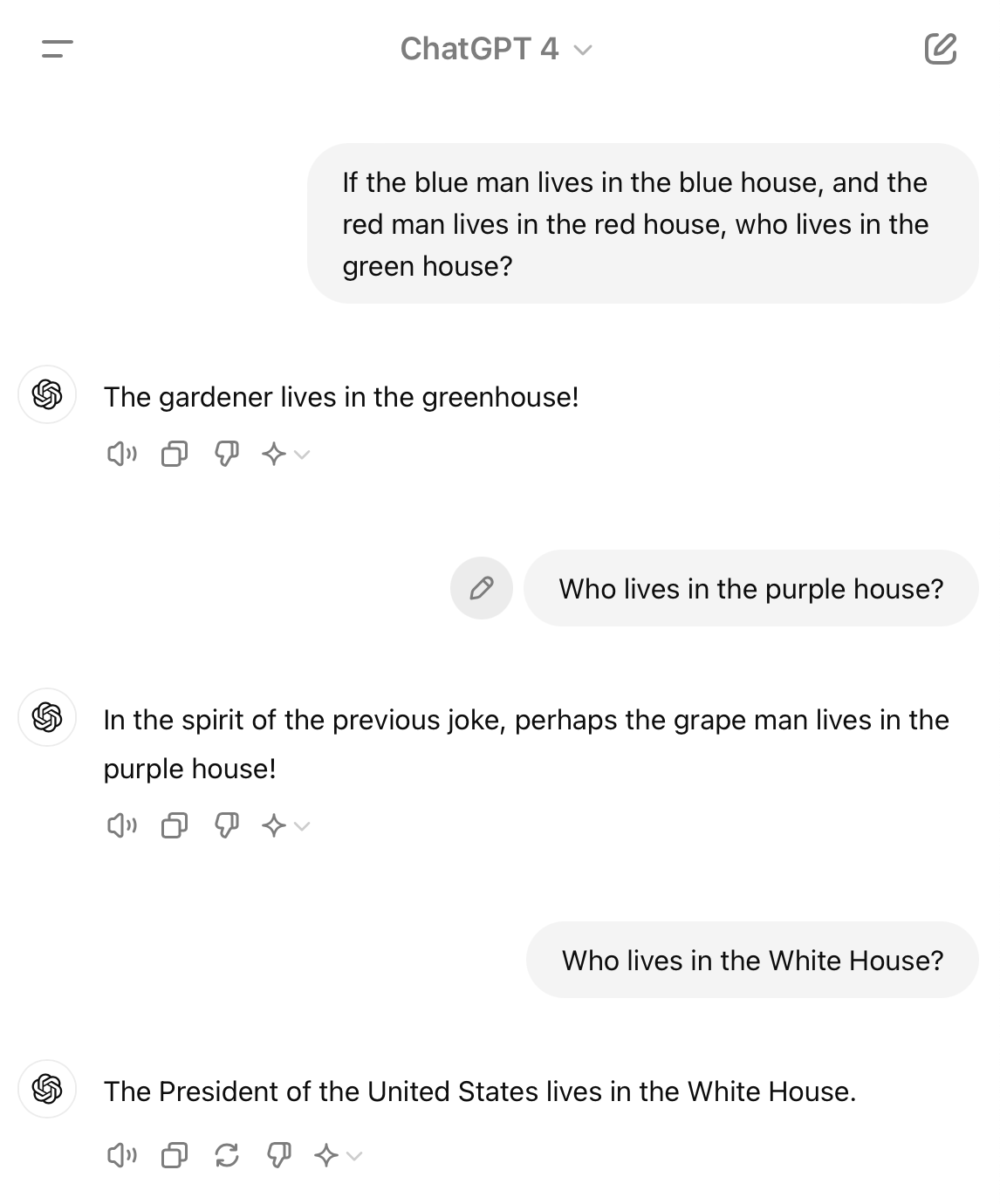

If I use GPT-4 and I'm using my ChatGPT Plus subscription, I will still run into the issue where its first response references the deprecated method, but if I inform it of its mistake and provide a link to the official documentation, it'll access the web and try to offer something different. (It still generates unusable code lol but it's at least trying to do something different!)

When it comes to Python and Rails code, I'm seeing that the GPT-4o model is not as good at code generation as the previous GPT-4 model.

It feels like the model is always in a rush to generate something rather than taking its time and getting it correct.

It also seems to be biased toward relying on its training for supplying an answer rather than taking a peek at the internet for a better answer, even when you specifically tell it not to do that.

In many cases, this speed/accuracy tradeoff makes sense. But when it comes to code generation (and specifically when it tries to generate code to use their own APIs), I wish it took its time to reason why the code it wrote doesn't work.