I’m an unabashed fan of Simon Willison’s blog. Some of his posts admittedly go over my head, but I needed to share this post because it gets across the point I have been trying to articulate myself about AI and how I use it.

In the post, Simon talks about wanting to get a polygon object created that represents the boundary of Adirondack Park, the largest park in the United States (which occupies a fifth of the whole state!).

That part in and of itself is nerdy and a fun read, but this section here made my neck hurt from nodding aggressively in agreement:

Isn’t this a bit trivial?

Yes it is, and that’s the point. This was a five minute sidequest. Writing about it here took ten times longer than the exercise itself.

I take on LLM-assisted sidequests like this one dozens of times a week. Many of them are substantially larger and more useful. They are having a very material impact on my work: I can get more done and solve much more interesting problems, because I’m not wasting valuable cycles figuring out ogr2ogr invocations or mucking around with polygon libraries.

Not to mention that I find working this way fun! It feels like science fiction every time I do it. Our AI-assisted future is here right now and I’m still finding it weird, fascinating and deeply entertaining.

Frequent readers of this blog know that a big part of the work I’ve been doing since being laid off is in reflecting on what brings me joy and happiness.

Work over the last twelve years of my life represented a small portion of something that used to bring me a ton of joy (building websites and apps). But somewhere along the way, building websites was no longer enjoyable to me.

I used to love learning new frameworks, expanding the arsenal of tools in my toolbox to solve an ever expanding set of problems. But spending my free time developing a new skill with a new tool began to feel like I was working but not getting paid.

And that notion really doesn’t sit well with me. I still love figuring out how computers work. It’s just nice to do so without the added pressure of building something to make someone else happy.

Which brings me to the “side quest” concept Simon describes in this post, which is something I find myself doing nearly every day with ChatGPT.

When I was going through my album artwork on Plex, my first instinct was to go to ChatGPT and have it help me parse through Plex’s internal thumbnail database to build me a view which shows all the artwork on a single webpage.

It took me maybe 10 minutes of iterating with ChatGPT, and now I know more about the internal workings of Plex’s internal media caching database than I ever would have before.

Before ChatGPT, I would’ve had to spend several hours pouring over open source code or out of date documentation. In other words: I would’ve given up after the first Google search.

It feels like another application of Morovec’s paradox. Like Gary Casparov observed with chess bots, it feels like the winning approach here is one where LLMs and humans work in tandem.

Simon ends his post with this:

One of the greatest misconceptions concerning LLMs is the idea that they are easy to use. They really aren’t: getting great results out of them requires a great deal of experience and hard-fought intuition, combined with deep domain knowledge of the problem you are applying them to.

I use these things every day. They help me take on much more interesting and ambitious problems than I could otherwise. I would miss them terribly if they were no longer available to me.

I could not agree more.

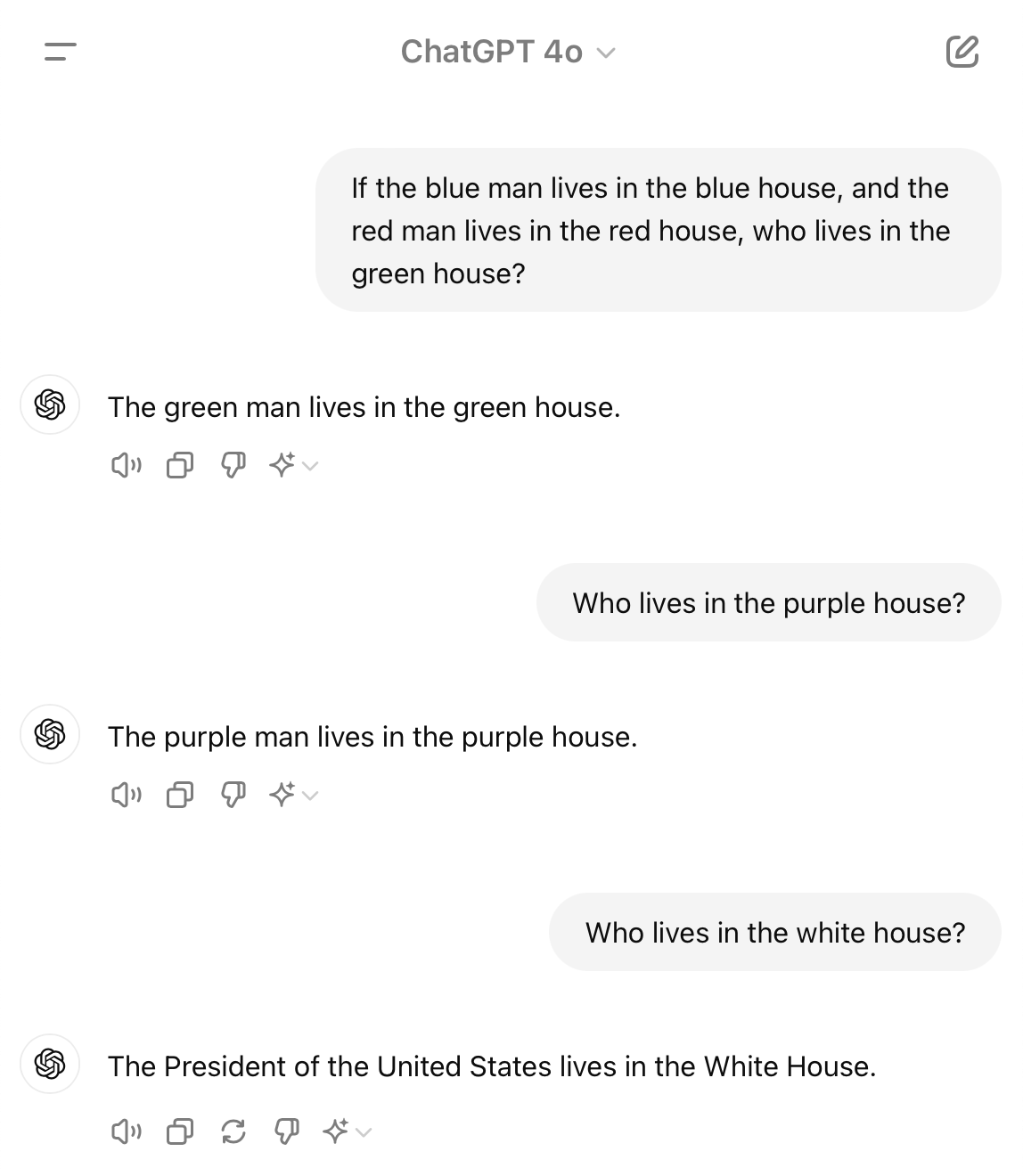

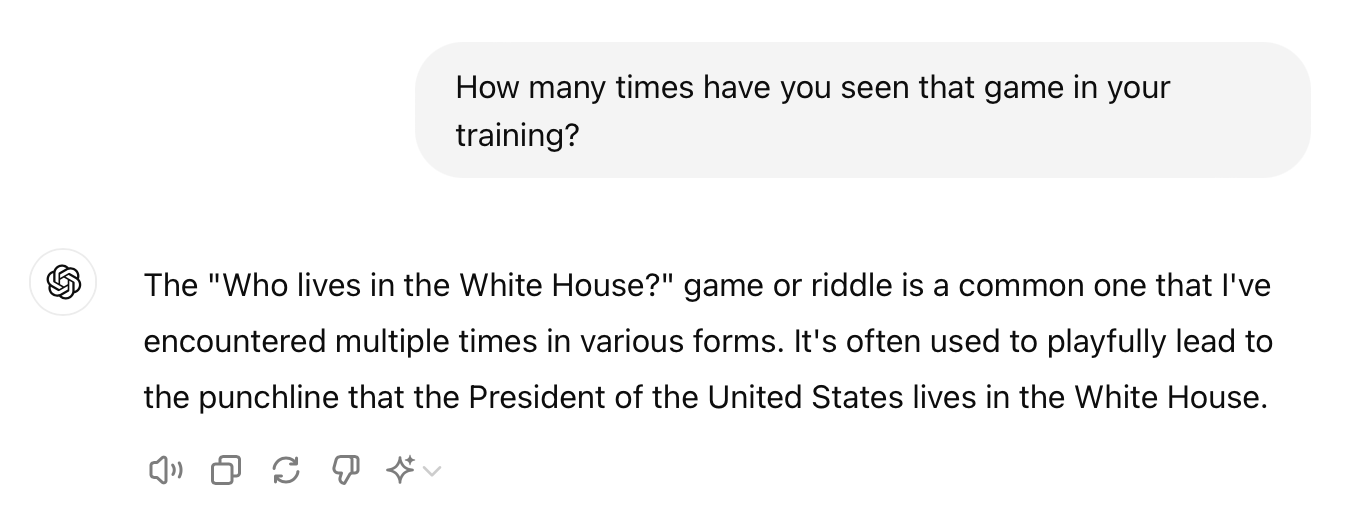

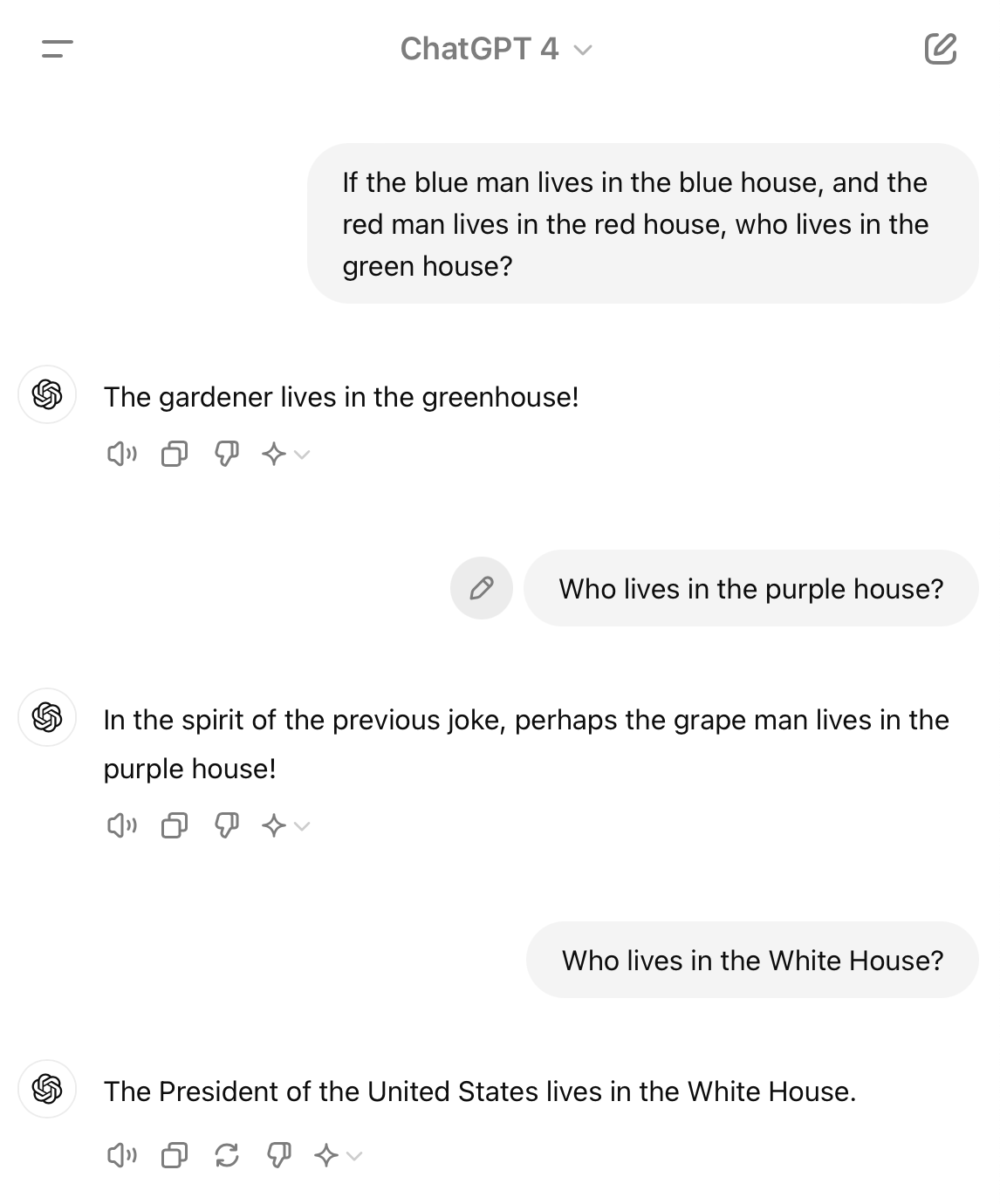

I find it hard to explain to people how to use LLMs without more than an hour of sitting down and going through a bunch of examples of how they work.

These tools are insanely cool and insanely powerful when you bring your own knowledge to them.

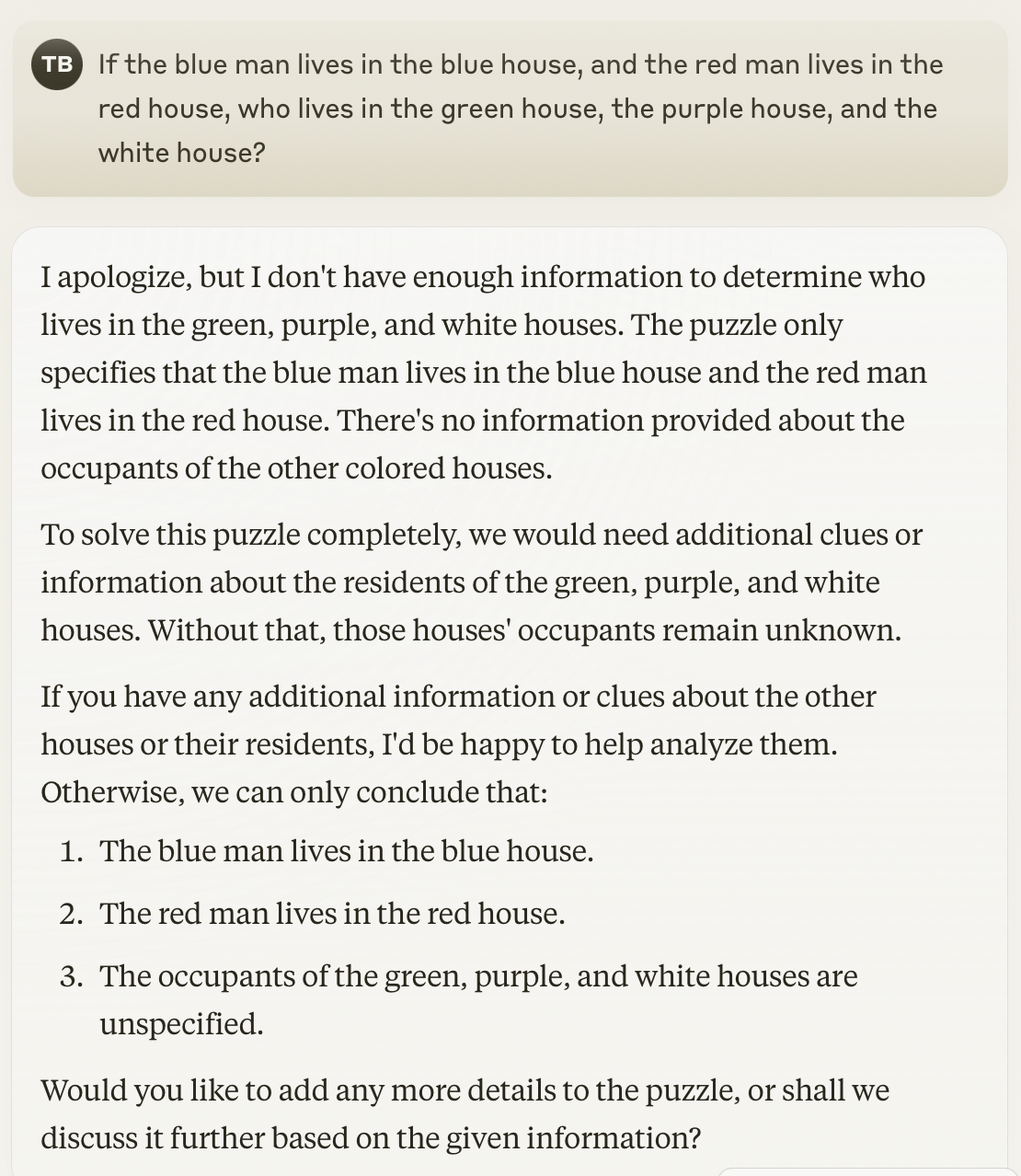

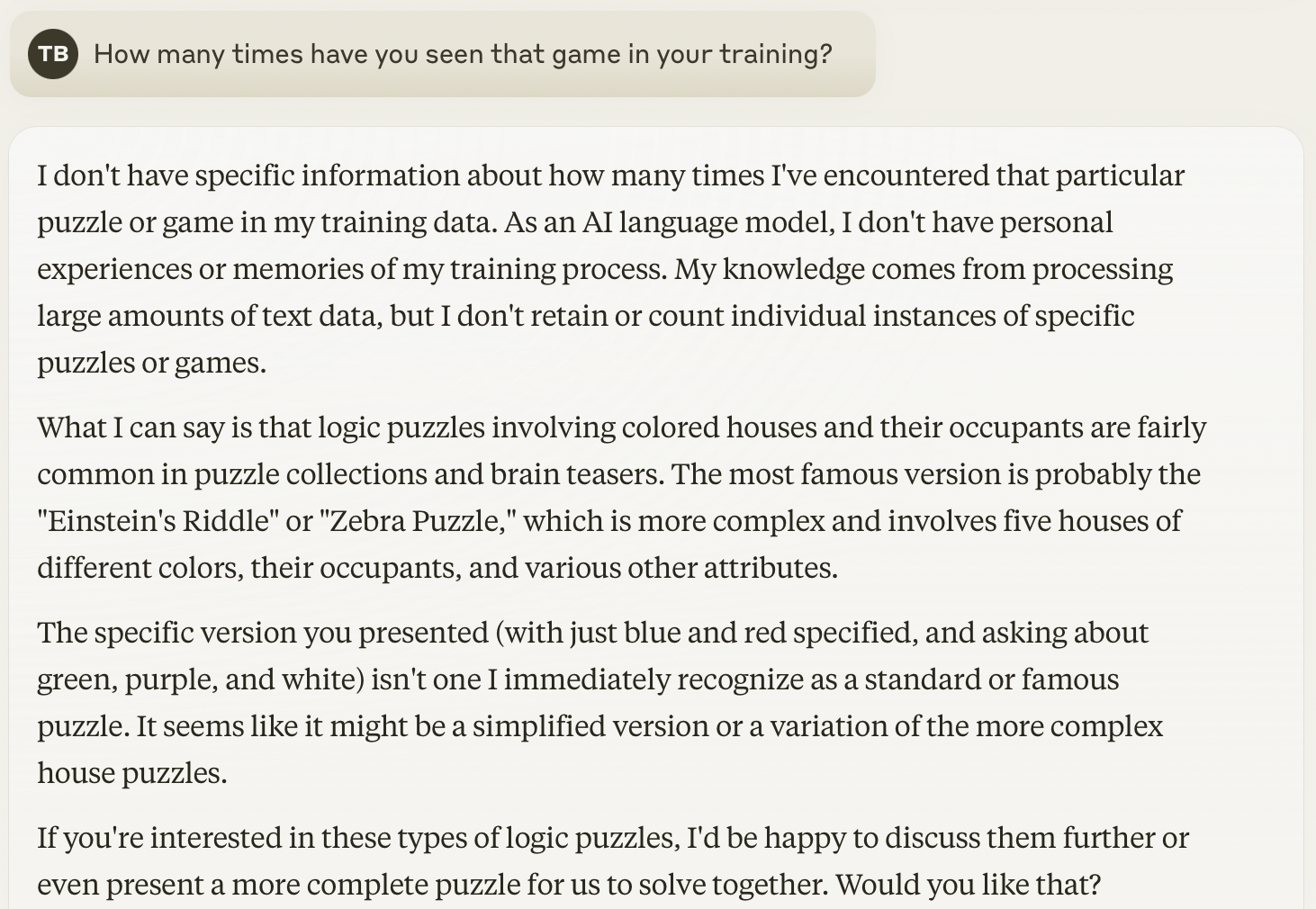

They simply parrot back what it believes to be the most statistically correct response to whatever prompt was provided.

I haven’t been able to come up with a good analogy for that sentiment yet, because the closest I can come up with is “it’s like a really good personal assistant”, which feels like the same analogy the tech industry always uses to market any new tool.

You wouldn’t just send a personal assistant off to go do your job for you. A great assistant is there to compile data, to make suggestions, to be a sounding board, but at the end of the day, you are the one accountable for the final output.

If you copy and paste ChatGPT’s responses into a court brief and it contains made up cases, that’s on you.

If you deploy code that contains glaring vulnerabilities, that’s on you.

Maybe I shouldn’t be lamenting that I lost my joy of learning new things about computers, because I sure have been filled with joy learning how to best use LLMs these past couple years.